Hey, Google! Guess what? Meya just launched an Actions on Google integration!

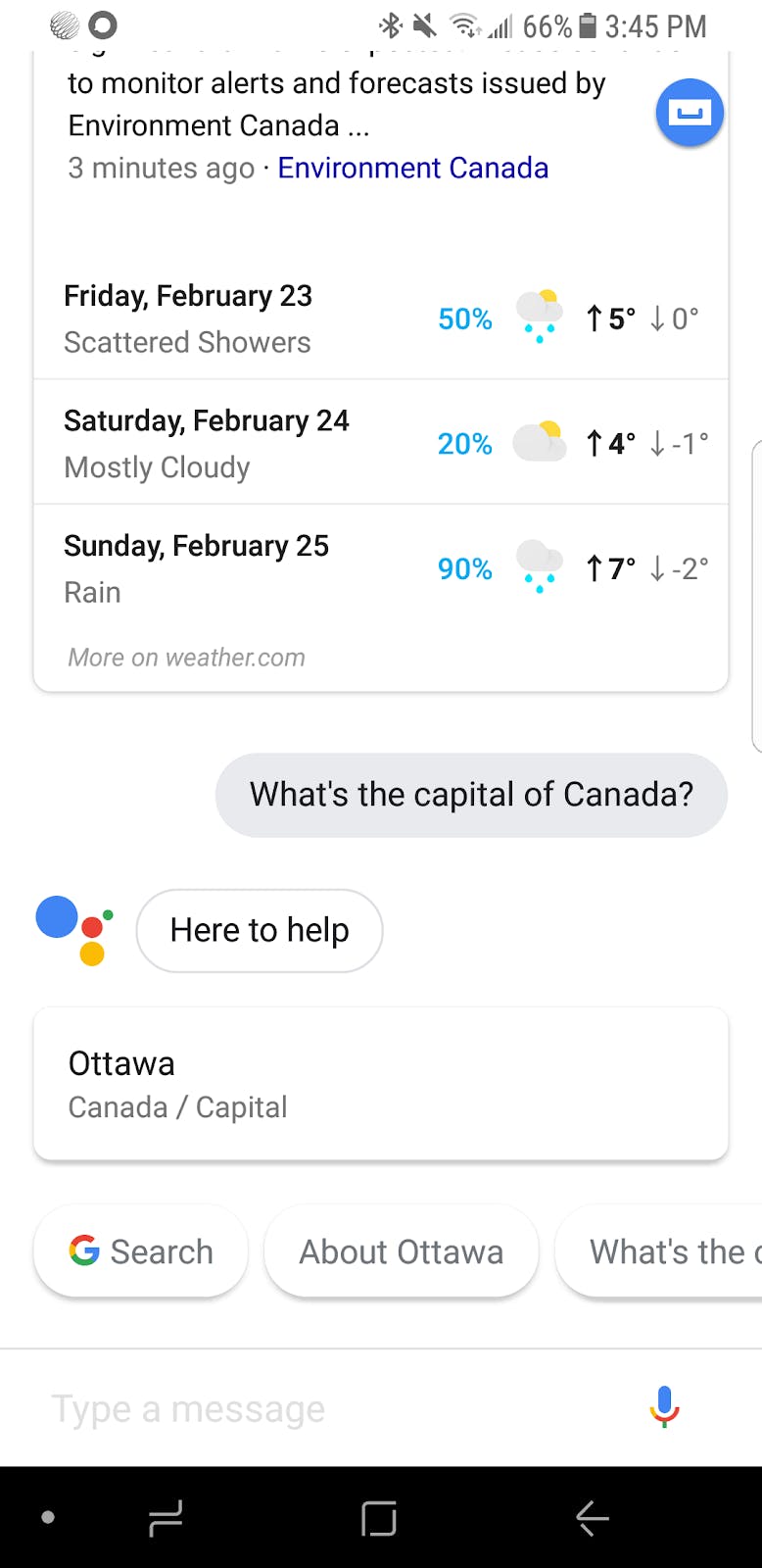

Google Home and Amazon Echo speakers were a hit this holiday season. The Alexa app for Amazon Echo speakers topped the App Store’s free charts on Christmas Day, with Google Home also in the top 10.

To enable your business to take advantage of this channel, Meya is excited to launch an integration for Actions on Google! Your customers can now interact with your cognitive app through both messaging and voice, enabling a new era of user experience.

With the Meya.ai Actions on Google integration, you can build cognitive apps for the Google Assistant. Google Assistant is currently on 400M+ devices, including Google Home smart speakers, phones, cars, TVs, headphones, and wearables. Your smart home and internet of things hardware can also be controlled and accessed through Google Assistant.

More and more consumers are becoming comfortable using their Google Assistant to find answers to their questions or complete tasks. The interactions are more personal than other channels, providing a unique opportunity to drive engagement.

Google has also made it easy for consumers to find your cognitive app. It maintains a directory that anyone can search, so new users can discover your company.

Development

We’ve made it as easy as possible for you to take your existing cognitive apps and add the Actions on Google integration. We’ve added new voice-specific components, properties and intents, and extended the functionality of our framework to enable both text and voice.

Some adaptation will be necessary, but for the most part, you won’t need to change what you’ve already built for text-based messaging.

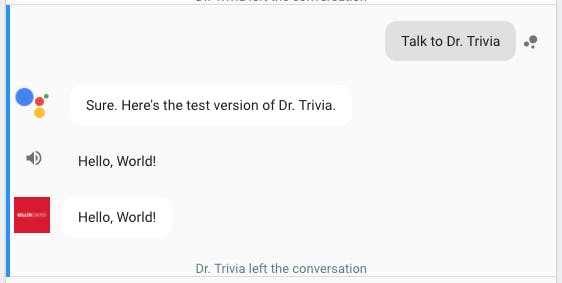

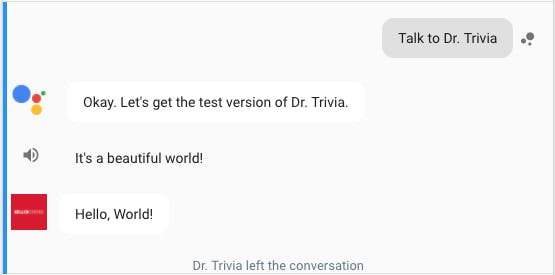

Actions on Google provides a simulator that is very similar to Meya’s built-in test chat. Both can be used to test your cognitive app as you develop.

User experience

For your customers to interact with your cognitive app, it’s as simple as saying “Hey Google, talk to {{insert your cognitive app’s name here}}.”

You can also trigger a specific flow using a keyword phrase or a natural language intent.

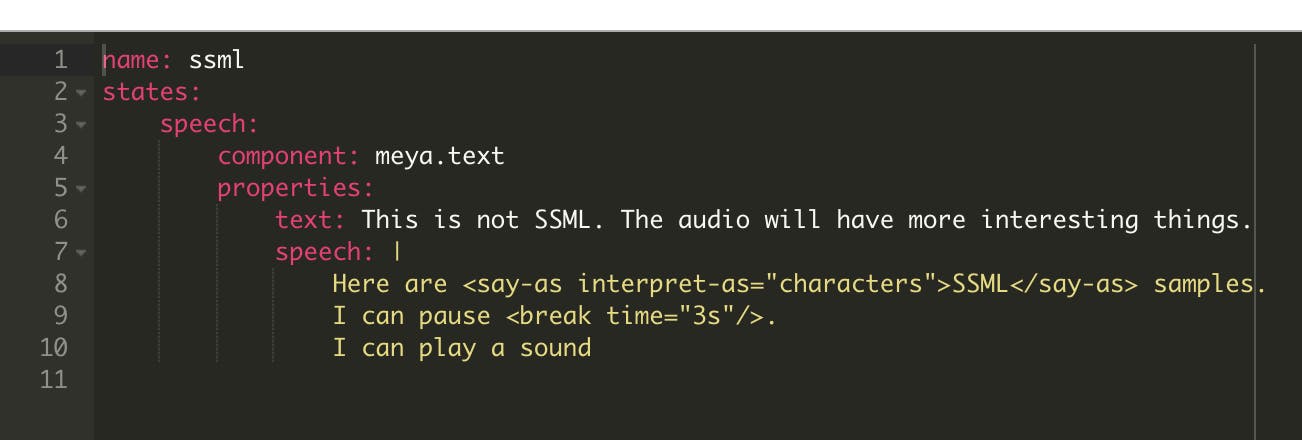

Within Meya, you can use Speech Synthesis Markup Language (SSML) to modify the speech of your cognitive app and make it seem more lifelike. Use the speech property in a state to clarify how the bot should pronounce words, acronyms, numbers, and dates, and to specify things like emphasis and breath pauses.
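To make that concrete, here is a minimal sketch of what an SSML string might look like, built in Python. The helper name `build_ssml` and the sample phrases are hypothetical, and how the resulting string is attached to the speech property of a Meya state is not shown here. The tags used (`speak`, `say-as`, `break`, `emphasis`) are standard SSML elements.

```python
import xml.etree.ElementTree as ET
from xml.sax.saxutils import escape


def build_ssml(intro: str, acronym: str, pause_ms: int, closing: str) -> str:
    """Build a small SSML document (hypothetical helper, not part of Meya).

    - say-as with interpret-as="characters" spells the acronym letter by letter
    - break inserts a pause of pause_ms milliseconds
    - emphasis stresses the closing phrase
    User-facing text is escaped so characters like & stay valid XML.
    """
    return (
        "<speak>"
        f"{escape(intro)} "
        f'<say-as interpret-as="characters">{escape(acronym)}</say-as>'
        f'<break time="{int(pause_ms)}ms"/>'
        f'<emphasis level="strong">{escape(closing)}</emphasis>'
        "</speak>"
    )


ssml = build_ssml("Your order ships via", "UPS", 300, "Thanks for asking!")

# Parsing the result is a cheap well-formedness check before sending it anywhere.
root = ET.fromstring(ssml)
print(ssml)
```

Building the markup through one helper keeps escaping in a single place, which matters once the spoken text includes user-supplied values.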

For more information, check out the Actions on Google SSML reference.

Google goes further into voice design principles and methodology in their Actions on Google documentation.

Some important considerations for voice-enabled cognitive applications:

- Voice messaging relies heavily on natural language processing. You’ll need to make some updates if your cognitive app uses cards, buttons or quick replies.

- Images, GIFs, and video will understandably not be visible in a voice conversation.

- Users are much more likely to try things in an unexpected order with voice. You’ll want to add ways for them to do this within your cognitive app.

- Google expects a response within 5 seconds before ending the conversation with the user. Longer running components may not be suitable for this integration.

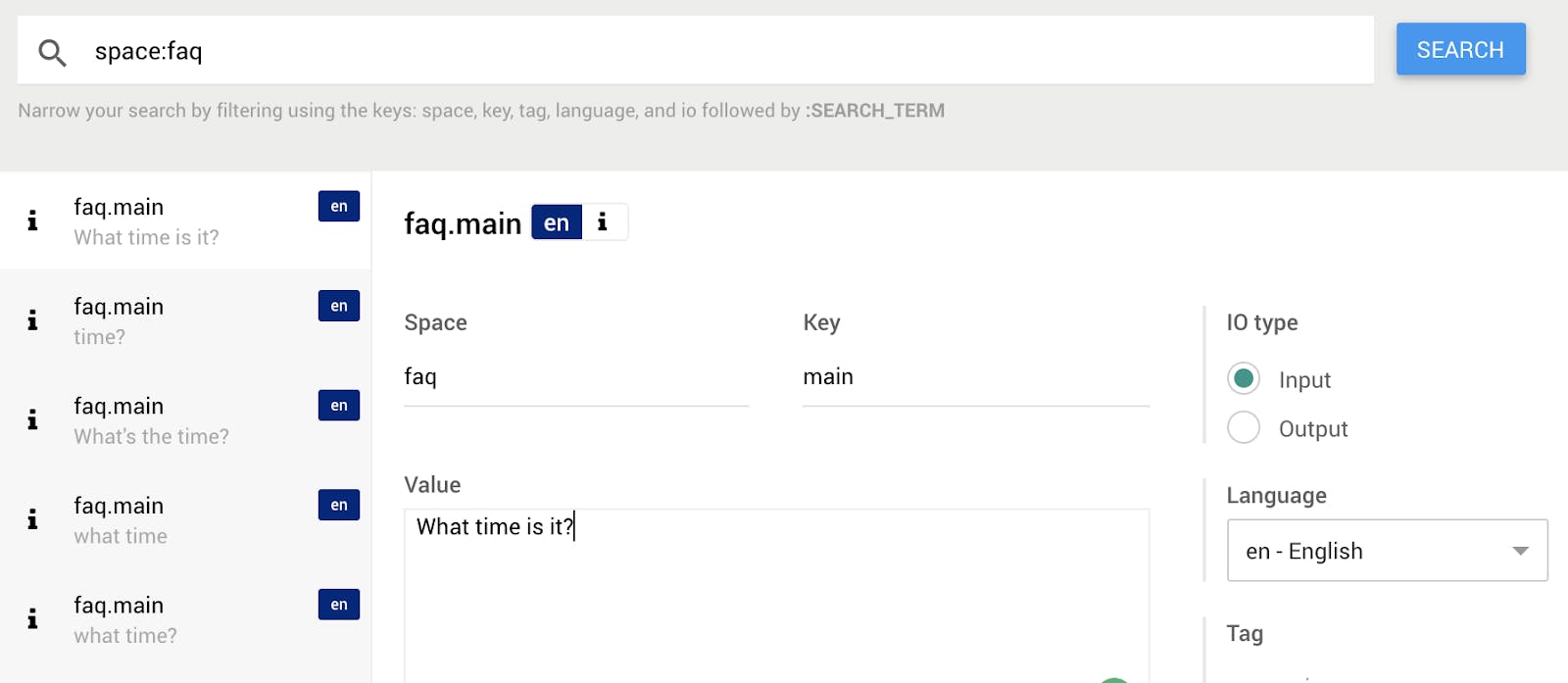

- “High” and “hi” sound the same, but have very different meanings. Input biasing can be done through Bot CMS to ensure your cognitive app receives the correct intent.

- Within Meya’s Actions on Google integration settings, you can define the message that a user will receive if what they said had no matching intent. By default, this is “I'm sorry, I don't understand.”

- Your cognitive app will leave the conversation and the user will continue chatting with Google Assistant when the bot does not expect a user response. For example, if a flow contains only a meya.text component, the conversation will end after printing this text. Using the “expect_user_action” property will enable you to keep the conversation open. More info can be found in our support documentation.

- Google Assistant prints text as well as speaking it. By default, your bot will print and say the same thing. This can be modified using the “speech” property.
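One of the considerations above is the 5-second response window. A common way to live with a limit like that is to run slow work under a deadline and answer with a short fallback if it does not finish in time. The sketch below shows that generic pattern in plain Python. It is not Meya’s API: the names `respond_within` and `DEADLINE_SECONDS`, and the 4-second margin, are assumptions chosen for illustration.

```python
import concurrent.futures
import time

# Assumption: stay safely under the 5-second limit mentioned above.
DEADLINE_SECONDS = 4.0


def respond_within(task, deadline, fallback):
    """Run task() in a worker thread and return its result.

    If the task has not finished within `deadline` seconds, return
    `fallback` instead. The worker thread is not cancelled; it keeps
    running in the background and its result is discarded.
    """
    pool = concurrent.futures.ThreadPoolExecutor(max_workers=1)
    future = pool.submit(task)
    try:
        return future.result(timeout=deadline)
    except concurrent.futures.TimeoutError:
        return fallback
    finally:
        # Do not block the reply while waiting for the slow task.
        pool.shutdown(wait=False)


def quick_lookup():
    return "Your order has shipped."


def slow_lookup():
    time.sleep(0.3)  # stands in for a long-running backend call
    return "Your order has shipped."


print(respond_within(quick_lookup, DEADLINE_SECONDS, "Still checking, please ask again shortly."))
print(respond_within(slow_lookup, 0.05, "Still checking, please ask again shortly."))
```

The fallback keeps the conversation alive with a short spoken reply, and the user can ask again once the slow work has had time to complete.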

To help you get started with Actions on Google, we’ve created a simple bot for testing. More information about development for Actions on Google can be found in our support documentation.

Publishing

Before your cognitive app can be accessed by the public, you’ll need to publish it. This will make it available in the Actions on Google directory and allow users to start interacting with your bot.

Publishing your bot will provide you with access to analytics including usage, health and how your bot is doing within the directory. More information about publishing your cognitive app can be found in the Actions on Google documentation.

As consumers demand different ways to interact with your cognitive application, we’ll provide the enabling technology. We’re working with companies to take conversations to the next level. Voice is the first in what will be many innovations to come. Stay tuned!

Our team has described how to get started in our Actions on Google support documentation. If you have a more specific question, don’t hesitate to reach out to support@meya.ai!

This integration is available for all Meya.ai accounts on the universal plan. If you're not on our universal plan but are interested in adding this integration to your cognitive app, find some time to speak with our sales team here.

PS: Google has a developer community program where you can get a free t-shirt and Google Cloud credits if you launch an Actions on Google app by March 31, 2018!